This post explores the interplay between artificial intelligence (AI), ethics, and information security. As AI becomes increasingly pervasive and processes vast amounts of personal data, strong security controls are essential to prevent misuse and to uphold ethical boundaries.

Before launching any AI initiative, an organization should understand the historical concerns around AI ethics, the significant overlap between ethical and security responsibilities, and the need for clearly defined roles and close collaboration among stakeholders. These elements are critical for addressing risks such as privacy violations, data leakage, and unauthorized access.

Ultimately, organizations must adopt proactive, integrated approaches that treat AI security and ethics as inseparable disciplines. In this domain, a culture of cautious design and continuous oversight is far preferable to the costly consequences of neglect.

The critical points we need to focus on are:

- AI’s Ubiquity and the Security Question: AI is embedded in daily life (e.g., phones, pharmacies, airports, cars), often without explicit user consent, and collects sensitive location and activity data. The author questions how this data is handled and stresses the need for secure management: granting access only to parties who need it and monitoring for abuse.

- Privacy as a Fundamental Concern: Personal information gathered by AI should remain private. This protection is grounded in law, which varies by jurisdiction, rather than in inherent rights. Unauthorized access or use violates individual privacy, and AI systems must be designed to prevent it.

- Ethical Foundations of AI Use: Drawing on Aristotle, the Ten Commandments, and the Quran, the article argues that using other people’s information without permission is inherently wrong. AI itself has no ethical awareness; it is a tool built by fallible humans, who must embed ethical constraints in it, even though the line between right and wrong can be blurry.

- Historical Context of AI Ethics: Concerns date back to 1920 (Karel Čapek’s R.U.R.), 1942 (Isaac Asimov’s laws of robotics), 1956 (early AI theorists on responsibility), and 1985 (James Moor’s “Computer Ethics”). These works emphasize societal discussion, ethical frameworks, and safeguards for responsible AI development.

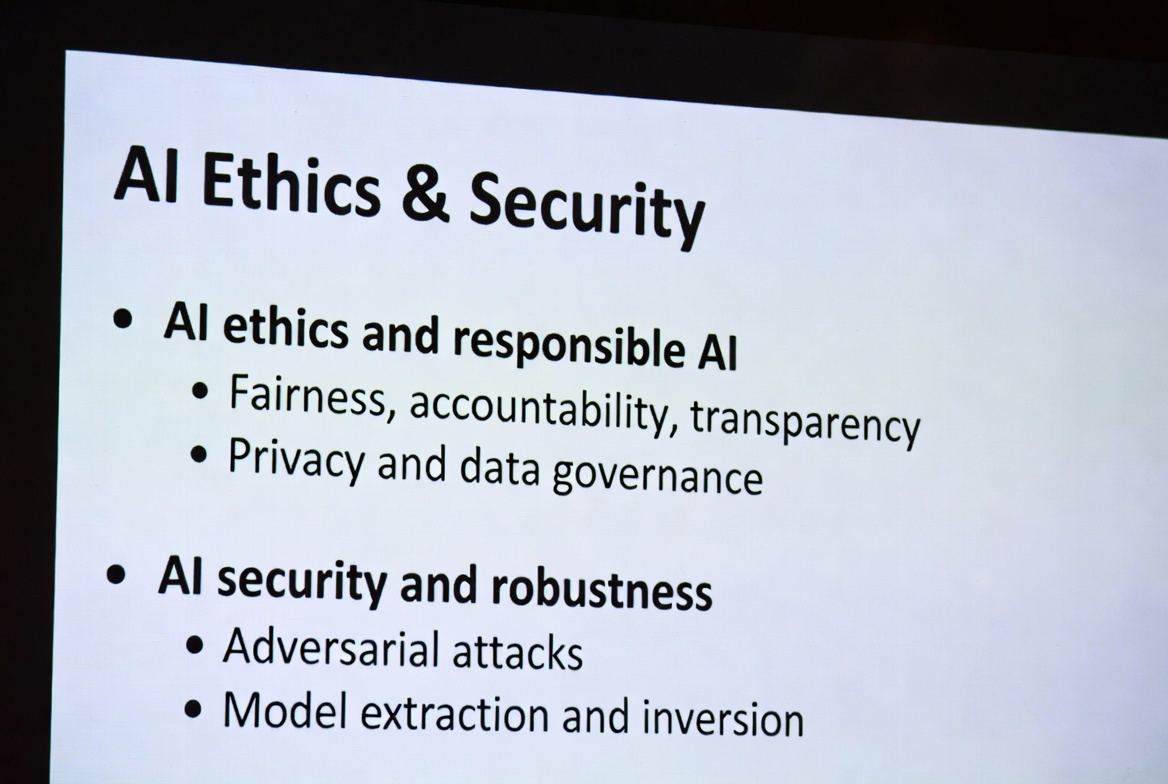

- Overlap Between AI Ethics and Security: Many organizations have AI Ethics Committees that address issues such as bias, privacy violations, harm, and legal compliance, all of which intersect with information security. A system cannot be ethical without being secure (e.g., an insecure system’s guardrails can be bypassed), but an unethical system (e.g., one built for spying or tax evasion) might still be “secure” in a technical sense, which raises philosophical questions.

- Practical Ramifications and Role Demarcation: Key questions include: Who handles disclosures of secrets or personal data? Who controls access to AI models, data, and algorithms? Who ensures recoverability if AI fails in a novel role? Some problems (e.g., racist or misogynistic outputs) are purely ethical, while others (e.g., access controls) are security-focused, but many fall into gray areas that require input from both sides.

- Call for Collaboration: These issues should have been addressed before AI adoption, but they are urgent now. Simply adding the CISO to the ethics committee, or an ethics representative to the security team, is not enough; genuine cooperation and the breaking down of silos are needed. As organizations advance their use of AI, the author argues that too much attention to ethical and security matters is preferable to too little oversight.